Nebius AI Studio

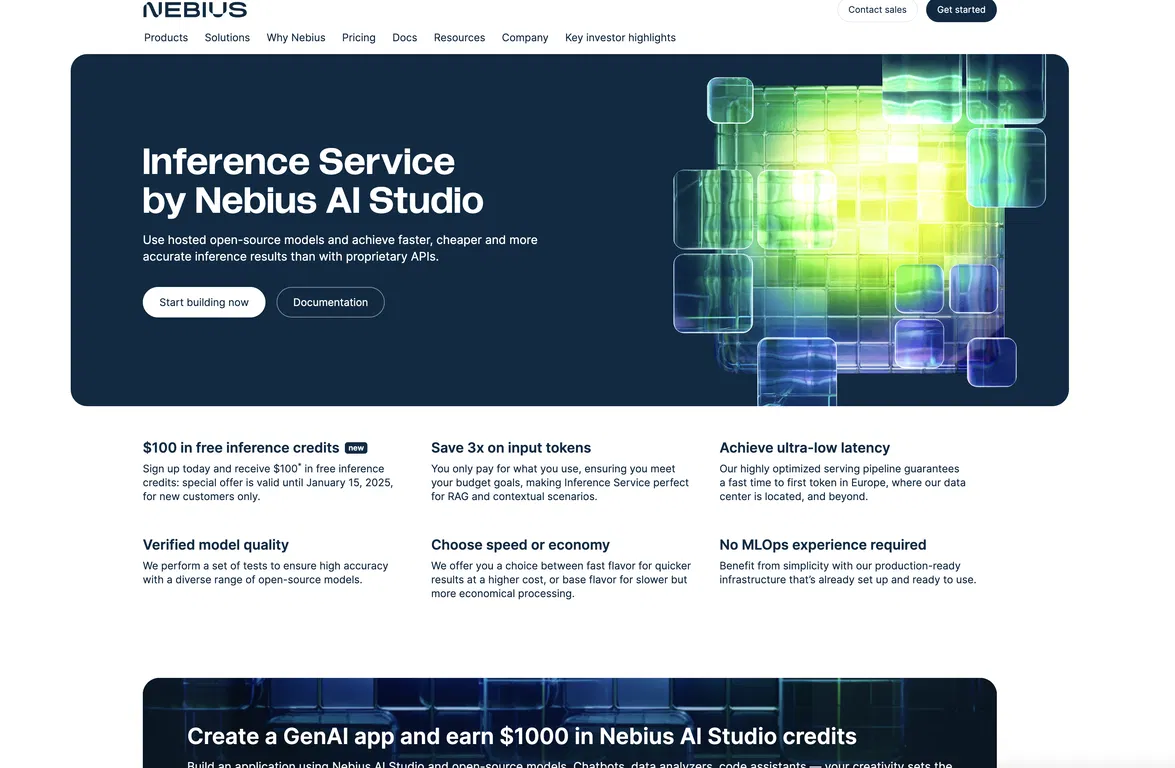

Host open-source models with Nebius AI Studio for fast, cost-efficient inference without MLOps expertise.

Nebius AI Studio offers an AI inference service built on hosted open-source models, designed to deliver fast, accurate, and affordable inference. It is tailored for users without Machine Learning Operations (MLOps) expertise: Nebius provides production-ready infrastructure, a variety of open-source models, and a user-friendly interface for streamlined deployment. Users in Europe benefit from ultra-low latency, and users can choose between a faster, premium processing option and a cheaper, standard-speed option. The platform also rewards app developers with credits and offers models such as Meta Llama and Ai2 OLMo, making AI deployment more accessible and scalable.

Key Features

Open-Source Model Library – Access a diverse range of models such as Meta Llama, Ai2 OLMo, and DeepSeek.

Production-Ready Infrastructure – Launch without MLOps experience; infrastructure setup is pre-configured.

Ultra-Low Latency in Europe – Optimized processing speeds for Europe-based users through a local data center.

Flexible Processing Speed Options – Choose between faster, premium options or cost-saving, slower processing.

App Development Credits – Earn Nebius credits for building applications using hosted models.
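As a rough illustration of calling a hosted model and picking a processing speed, the sketch below builds (but does not send) a chat-completions HTTP request using only the Python standard library. It rests on several assumptions not stated in this listing: that the service exposes an OpenAI-compatible endpoint at the base URL shown, that the faster premium flavor of a model is selected with a hypothetical `-fast` model-name suffix, and that the model ID used in the usage line is available. `build_chat_request` is a hypothetical helper name. Check the provider's own documentation before relying on any of these details.

```python
import json
import urllib.request

# Assumption: OpenAI-compatible base URL for Nebius AI Studio; verify in the official docs.
BASE_URL = "https://api.studio.nebius.com/v1"


def build_chat_request(api_key: str, model: str, prompt: str,
                       fast: bool = False) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request.

    Hypothetical convention: the faster, premium flavor of a model is chosen
    by appending "-fast" to the model ID; the standard flavor uses the plain ID.
    """
    model_id = f"{model}-fast" if fast else model
    payload = {
        "model": model_id,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Example model ID (assumed); replace with one listed in your account.
    req = build_chat_request("YOUR_API_KEY",
                             "meta-llama/Meta-Llama-3.1-8B-Instruct",
                             "Hello!", fast=True)
    print(req.get_method(), req.full_url)
    print(json.loads(req.data)["model"])
    # To actually send it: urllib.request.urlopen(req)
```

Keeping the speed choice as a single boolean makes the cost/latency trade-off described under Flexible Processing Speed Options an explicit, per-request decision.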

Advantages

- No MLOps expertise required; production-ready infrastructure simplifies deployment.

- Diverse open-source model library supports various AI applications.

- Low-cost processing options make it budget-friendly.

- Ultra-low latency for faster processing, especially in Europe.

- Credit incentives for developers building on Nebius AI Studio.

Limitations

- Premium speeds come at a higher cost.

- Some models may have limited availability outside Europe.

- Credits are available only for new users or select applications.